Some Experiments into How Google's Crawler works



When developers go live on production, the site should work properly, and that includes being free of user experience and on-site SEO issues. But here you often hit a problem: how do you check a site when it's not yet live on the production server? Sites often sit in a staging environment first, and these are generally hidden behind HTTP authentication to keep nosy web surfers and crawling bots out.

A new feature in The Crawl Tool solves this problem by adding the ability to set a username and password for HTTP authentication. It can then use these to crawl your staging site and check it for issues. Here's how...

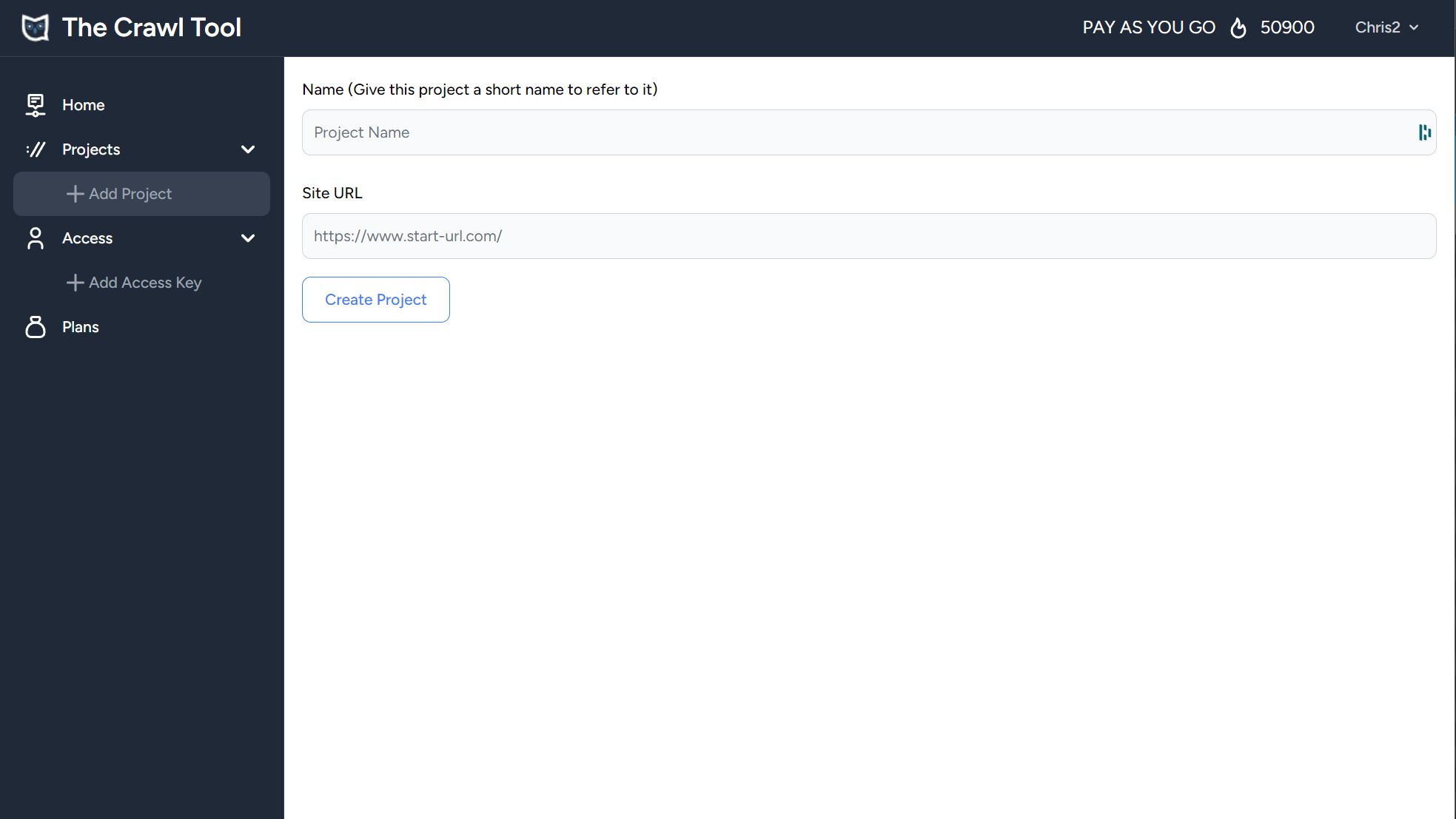

When you're creating a project (through the "Add Project" left menu item), fill in a name and the URL as usual.

When you click "Create Project", The Crawl Tool checks the URL you entered. Because the server responds by asking for a username and password, The Crawl Tool adjusts the form to ask for these.

It's as easy as filling in the correct username and password for the site and then clicking the Create Project button again. This time the tool will perform the check using this username and password, which should create the project.
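For the curious, the check-then-retry flow described above can be sketched in a few lines of Python. This is only an illustration of how HTTP authentication generally works, not The Crawl Tool's actual code: the function names `needs_http_auth` and `basic_auth_header` are made up for this example, and it assumes the staging server uses the common Basic scheme.

```python
import base64


def needs_http_auth(status_code: int, headers: dict) -> bool:
    """Return True when a response looks like an HTTP authentication challenge.

    A protected server answers with status 401 and a WWW-Authenticate header.
    """
    # Header names are case-insensitive, so normalise them before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    return status_code == 401 and "www-authenticate" in lowered


def basic_auth_header(username: str, password: str) -> str:
    """Build the Authorization header value for the Basic scheme."""
    # Basic auth is simply "username:password", base64-encoded.
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")
```

A first request without credentials that makes `needs_http_auth` return `True` is the cue to ask for a username and password. The second attempt then sends the value from `basic_auth_header` in an `Authorization` header. Because Basic credentials are only encoded, not encrypted, the staging site should be served over HTTPS.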

When a crawl is performed, The Crawl Tool also uses this username and password to crawl the site and gather the data. Bingo! You get The Crawl Tool's user experience and on-site SEO reports, even for a staging site behind a username and password.
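If you are wondering what "using the username and password to crawl" might look like in practice, here is a minimal sketch with Python's standard library. It is an assumption about how such a crawler could behave, not a description of The Crawl Tool's internals: `build_request` is a hypothetical helper, the URLs are placeholders, and it again assumes Basic authentication.

```python
import base64
from urllib.parse import urlsplit
from urllib.request import Request


def build_request(url: str, start_url: str, username: str, password: str) -> Request:
    """Prepare a request for one page of the crawl.

    Credentials are attached only when the page is on the same host as the
    project's start URL, so they are not leaked to external links.
    """
    request = Request(url)
    if urlsplit(url).netloc == urlsplit(start_url).netloc:
        raw = f"{username}:{password}".encode("utf-8")
        token = base64.b64encode(raw).decode("ascii")
        request.add_header("Authorization", f"Basic {token}")
    return request


# Example: a page on the staging host gets the header, an external link does not.
staging = build_request(
    "https://staging.example.com/about", "https://staging.example.com/", "user", "secret"
)
external = build_request(
    "https://other.example.org/", "https://staging.example.com/", "user", "secret"
)
```

Passing each prepared request to `urllib.request.urlopen` would then fetch the page. Limiting the header to the project's own host is a design choice in this sketch: a crawl follows links, and the staging password has no business travelling to third-party sites.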

